MR-Sense: A Mixed Reality Environment Search Assistant for Blind and Visually Impaired People

Object detection meets Extended Reality. We present MR-Sense, a mixed reality guidance tool for blind and visually impaired people that uses deep learning.

IEEE AIxVR, Hollywood 2024

Abstract

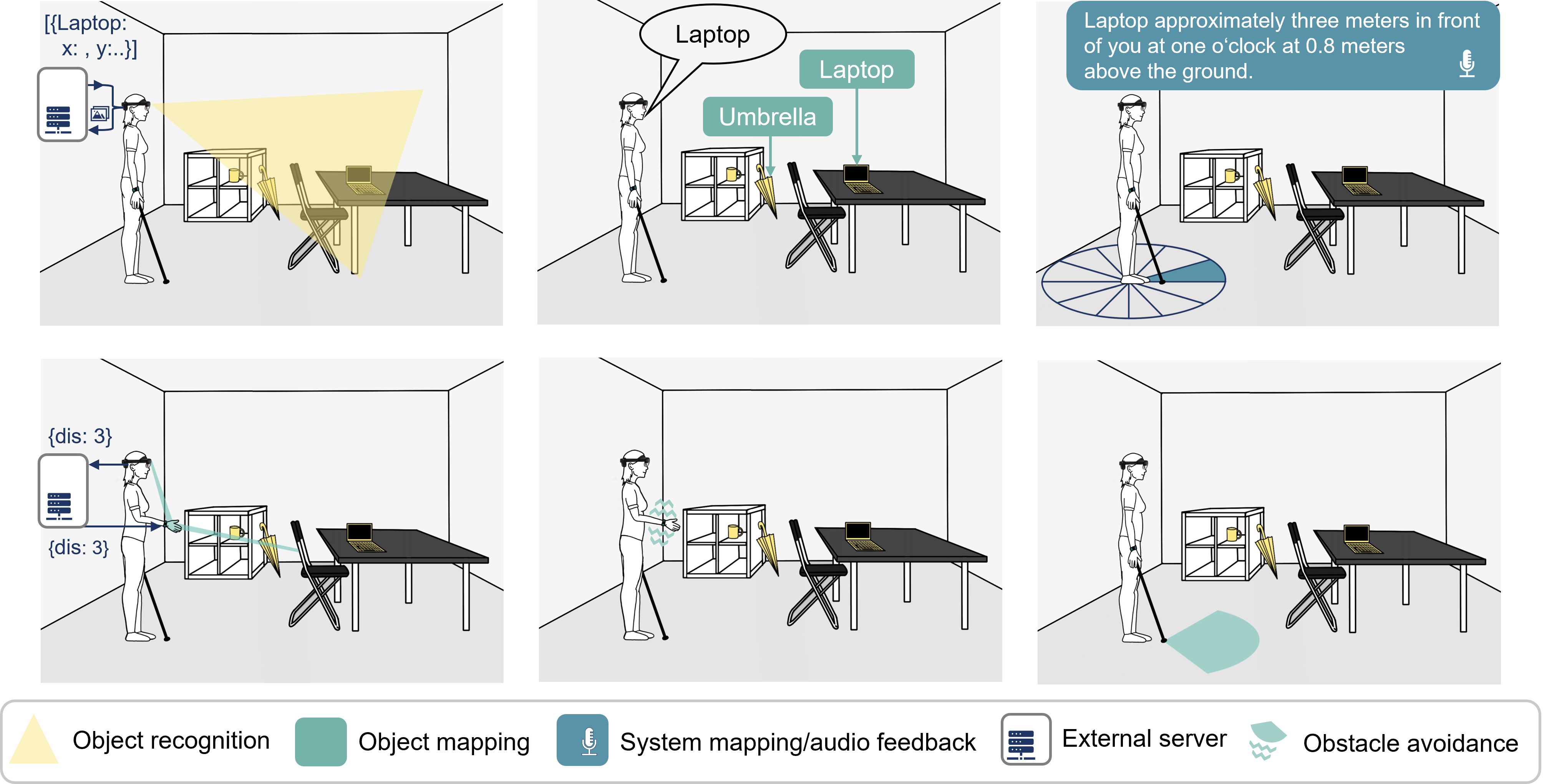

Search tasks can be challenging for blind or visually impaired people. To determine an object's location and to navigate there, they often rely on the limited sensory capabilities of a white cane, search haptically, or ask for help. We introduce MR-Sense, a mixed reality assistant to support search and navigation tasks. The system is designed in a participatory fashion and utilizes sensory data of a standalone mixed reality head-mounted display to perform deep learning-driven object recognition and environment mapping. The user is supported in object search tasks via spatially mapped audio and vibrotactile feedback. We conducted a preliminary user study including ten blind or visually impaired participants and a final user evaluation with thirteen blind or visually impaired participants. The final study reveals that MR-Sense alone cannot replace the cane but provides a valuable addition in terms of usability and task load. We further propose a standardized evaluation setup for replicable studies and highlight relevant opportunities and challenges to foster future work on accessibility technology.
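The abstract mentions spatially mapped audio and vibrotactile feedback but not the mapping itself. As a minimal sketch, assuming the cue is driven by the angle between the user's heading and the target's bearing (both compass-style yaw in degrees, clockwise positive), the hypothetical functions `angular_error_deg` and `pulse_interval_s` below turn that angle into a signed error and a vibration pulse interval that shortens as the user turns toward the object. The linear mapping and the 0.1 s to 1.0 s range are illustrative assumptions, not values from the study.

```python
def angular_error_deg(user_yaw_deg: float, target_bearing_deg: float) -> float:
    """Signed smallest angle from the user's heading to the target, in (-180, 180].

    Positive means the target is to the right (clockwise), negative to the left.
    """
    diff = (target_bearing_deg - user_yaw_deg) % 360.0
    return diff - 360.0 if diff > 180.0 else diff


def pulse_interval_s(error_deg: float, min_s: float = 0.1, max_s: float = 1.0) -> float:
    """Hypothetical haptic mapping: seconds between vibration pulses.

    Facing the target (error 0) gives the fastest pulses (min_s);
    facing away (|error| = 180) gives the slowest (max_s), linear in between.
    """
    t = min(abs(error_deg), 180.0) / 180.0
    return min_s + t * (max_s - min_s)


if __name__ == "__main__":
    err = angular_error_deg(user_yaw_deg=350.0, target_bearing_deg=10.0)
    print(f"error={err:.1f} deg, pulse every {pulse_interval_s(err):.2f} s")
```

Wrapping the difference modulo 360 keeps the error continuous when the heading crosses north: turning from 350° toward a target at 10° yields an error of 20°, not -340°.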

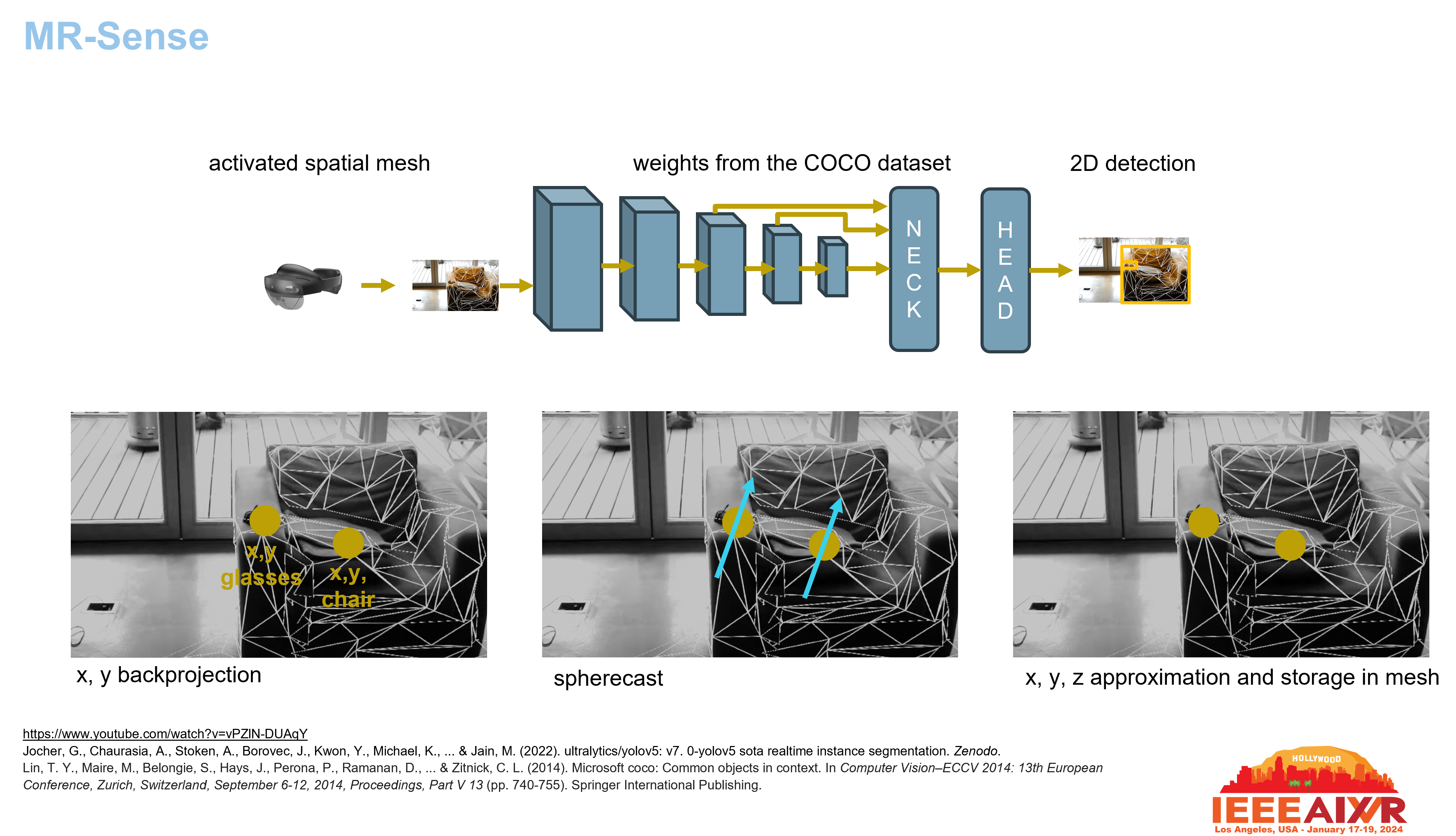

System

MR-Sense is built with Unity, Python, and Swift and runs on a head-mounted display (HMD; HoloLens 2), a smartwatch (Apple Watch Series 6), and an external server. The server runs YOLOv5 to detect objects in the HoloLens image stream, and the Apple Watch provides vibrotactile feedback.
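Once the server has run YOLOv5 on a HoloLens frame, a 2D bounding box still has to become a direction the user can act on. The sketch below assumes a simple pinhole camera with a known horizontal field of view and no lens distortion; the function names and the 60° field of view in the usage line are hypothetical, not the HoloLens 2 calibration or the authors' code. A full system would also need a distance, for example by casting this ray against the headset's spatial map, which is omitted here.

```python
import math


def focal_length_px(image_width: int, hfov_deg: float) -> float:
    """Pinhole focal length in pixels, derived from the horizontal field of view."""
    return (image_width / 2.0) / math.tan(math.radians(hfov_deg) / 2.0)


def bbox_azimuth_deg(x1: float, x2: float, image_width: int, hfov_deg: float) -> float:
    """Horizontal angle of a bounding-box centre relative to the camera's optical axis.

    x1, x2 are the box's left/right pixel coordinates (xyxy convention).
    Negative = left of the image centre, positive = right.
    """
    cx = (x1 + x2) / 2.0
    f = focal_length_px(image_width, hfov_deg)
    return math.degrees(math.atan((cx - image_width / 2.0) / f))


if __name__ == "__main__":
    # Assumed values for illustration only: 1280 px wide frame, 60 deg horizontal FOV.
    print(round(bbox_azimuth_deg(900, 1000, image_width=1280, hfov_deg=60.0), 1))
```

Using `atan` on the pixel offset, rather than scaling linearly by field of view per pixel, keeps the angle exact for a pinhole model all the way to the image edges, where a box at the border maps to exactly half the field of view.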

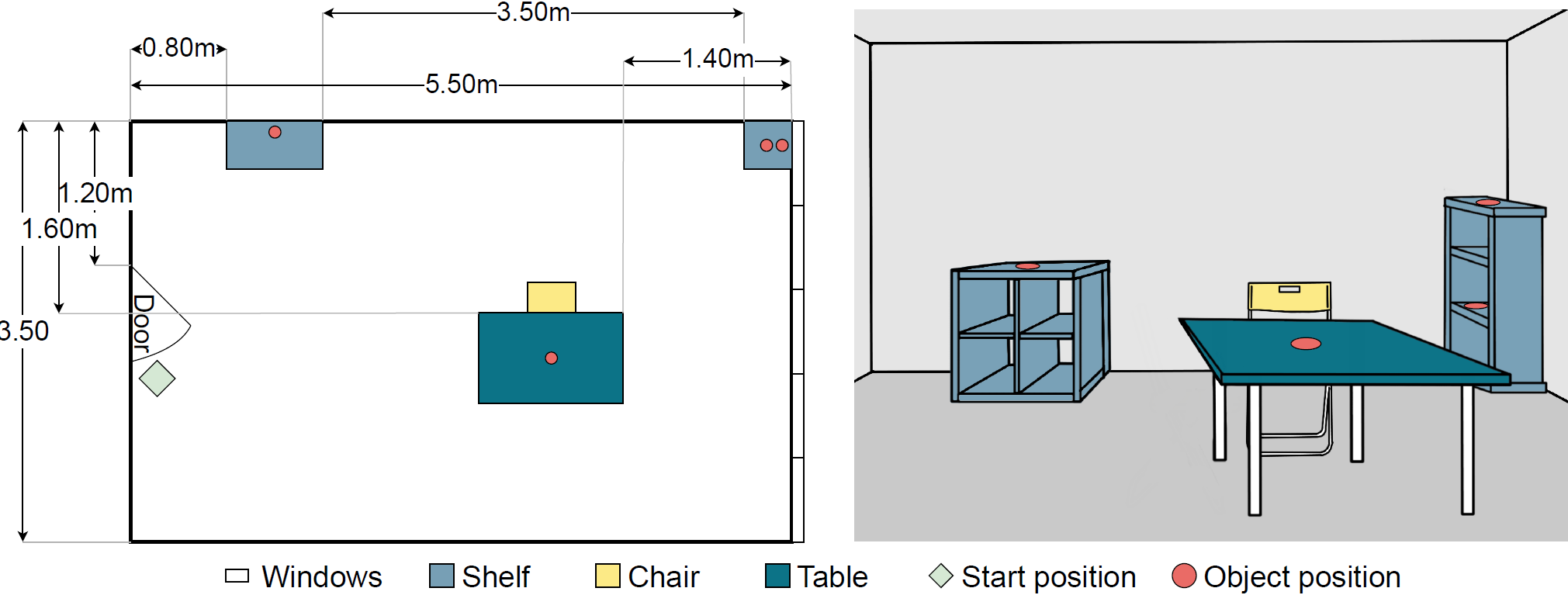

Reproducible Study Apparatus

As a realistic and reproducible experimental setup, we furnished a common indoor room with two shelves, a table, and a chair. All items were purchased at IKEA:

- KALLAX, white, 77x39x77 cm

- KALLAX, white, 42x39x112 cm

- LINNMON / ADILS desk, dark grey/black, 100x60x73 cm

- GUNDE chair, black

As search objects, we selected a banana, a laptop, a bottle, and a flower (all four fall within the 80 object categories of the COCO dataset).
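Because the detector reports every COCO class it recognizes, the assistant presumably has to keep only detections of the object the user is searching for. The following is a minimal sketch, assuming a simplified `Detection` record rather than YOLOv5's actual results object: it filters by class name and a confidence threshold and keeps the highest-scoring hit. COCO's class list has no entry literally named `flower`, so the sketch assumes the flower is reported as `potted plant`; that mapping, the 0.5 threshold, and all names here are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Iterable, Optional

# Hypothetical mapping from the study's search objects to COCO class names.
# Assumption: the flower is detected under COCO's "potted plant" class.
SEARCH_TARGETS = {
    "banana": "banana",
    "laptop": "laptop",
    "bottle": "bottle",
    "flower": "potted plant",
}


@dataclass(frozen=True)
class Detection:
    """Simplified detector output: class name, score, and xyxy box in pixels."""
    label: str
    confidence: float
    x1: float
    y1: float
    x2: float
    y2: float


def best_match(
    detections: Iterable[Detection], target: str, min_conf: float = 0.5
) -> Optional[Detection]:
    """Return the most confident detection of `target`, or None if nothing qualifies."""
    coco_label = SEARCH_TARGETS[target]
    candidates = [
        d for d in detections if d.label == coco_label and d.confidence >= min_conf
    ]
    return max(candidates, key=lambda d: d.confidence, default=None)


if __name__ == "__main__":
    frame = [
        Detection("bottle", 0.91, 410, 220, 470, 380),
        Detection("cup", 0.88, 600, 300, 650, 360),
        Detection("bottle", 0.55, 100, 240, 150, 390),
    ]
    print(best_match(frame, "bottle"))
```

Keeping only the single most confident box is the simplest choice; a real assistant might instead track detections across frames so the feedback does not flicker when scores fluctuate.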

Citation

TBA

We gratefully acknowledge funding for this study from d.hip campus.